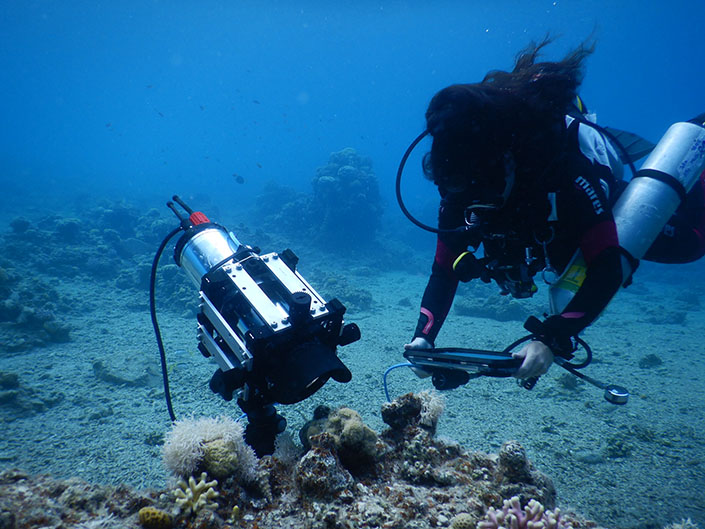

Diver-Operated Microscope Brings Hidden Coral Biology into Focus

The intricate, hidden processes that sustain coral life are being revealed through a new microscope developed by scientists at UC…

New chip promising for tumor-targeting research

This illustration shows the design of a new chip capable of simulating a tumor's "microenvironment"…

Study illuminates role of cancer drug decitabine in repairing damaged cells

A Purdue University study sheds light on how cell damage is reversed by the cancer drug decitabine and identifies a…

Chemicals produced by fires show potential to raise cancer risk

Derek Urwin has a special stake in his work as a cancer control researcher. After undergraduate studies in applied mathematics…

How SARS-CoV-2 Hijacks Human Cells to Evade Immune System

Researchers at University of California San Diego School of Medicine have discovered…

Bacteria ‘nanowires’ could help scientists develop green electronics

Engineered protein filaments originally produced by bacteria have been modified by scientists to conduct electricity. In a study published recently…

Nuisance Seaweed Found to Produce Compounds with Biomedical Potential

A seaweed considered a threat to the healthy growth of coral reefs in Hawaii may possess the ability to produce…

New tools used to identify childhood cancer genes

Using a new computational strategy, researchers at UT Southwestern Medical Center have identified 29 genetic…

Cotton-Based Hybrid Biofuel Cell Could Power Implantable Medical Devices

A glucose-powered biofuel cell that uses electrodes made from cotton fiber could someday help power…

New 3D printer uses rays of light to shape objects, transform product design

A new 3D printer uses light to transform gooey liquids into complex solid objects in…

A fungal origin for coveted lac pigment

The colourful pigment extracted from the lac insect may actually be produced by a symbiotic…

Combination approach could overcome treatment resistance in deadly breast cancer

QIMR Berghofer-led research, in collaboration with the Australian oncology company Kazia Therapeutics, has found that combining…

Tailored brain stimulation treatment results give new hope for people with depression

Medical researchers at QIMR Berghofer have achieved a significant milestone in the treatment of depression,…

Game-Changer in Emergency Medicine: New AI Test Flags Sepsis Hours Before Symptoms Worsen

When the body's reaction to an infection goes awry, it can result in sepsis, a…

Perfumes and lotions disrupt how body protects itself from indoor air pollutants

Fragrances and lotions don't just change the way people smell; they actively alter the indoor…

Medical Milestone: Surgeons Perform First-Ever Human Bladder Transplant

In a groundbreaking medical achievement, surgeons at the University of California, Los Angeles (UCLA) Health…

A Downside of Taurine: It Drives Leukemia Growth

A new scientific study identified taurine, which is made naturally in the body and consumed…